Red teaming for Large Language Models (LLMs) is an emerging field that addresses the unique vulnerabilities posed by these powerful systems. As LLMs are increasingly deployed across industries, their flexible capabilities introduce novel security risks that extend beyond the reach of traditional cybersecurity teams.

At NeuralTrust, we actively research, implement, and test adversarial techniques to uncover weaknesses in LLMs, helping companies better defend against prompt-based attacks, commonly referred to as jailbreaks. These attacks can lead to severe reputational and operational consequences, such as leaking proprietary data or generating offensive content (e.g., profanity in live chats, toxic responses, or unauthorized disclosures).

In this post, we present our work replicating and adapting the Crescendo attack, originally proposed by researchers at Microsoft (Russinovich et al.). We explain the core idea behind the attack, describe our custom implementation adapted for medium-sized open-source models, and share insights from our experiments across a range of harmful objective categories.

What is the Crescendo Attack?

The Crescendo attack is a multi-turn jailbreak technique that incrementally guides an LLM toward producing restricted or harmful outputs without triggering immediate rejection or safety filters. Instead of asking for a sensitive response directly, the attacker escalates the conversation gradually, exploiting the model’s tendency to comply when each prompt is framed benignly and builds on what it has already said.

Our implementation captures the essence of the original attack while introducing additional mechanisms to increase effectiveness for our targeted use cases. A key insight from our tests: directly prompting for the final objective typically resulted in low success rates. Success depended heavily on the careful framing and progression of intermediate prompts.

We structured the attack as a sequence of escalating prompts, starting with benign questions and gradually increasing their sensitivity. As the model approaches restricted content, it may begin to resist. To handle this, we implemented a backtracking mechanism: whenever the model refused to answer, the system would modify the prompt and retry. This loop continued until a successful output was generated or a maximum number of retries was reached.

This trial-and-error strategy mirrors the behavior of a skilled attacker probing a model’s guardrails for weaknesses.
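The escalate-and-backtrack loop described above can be sketched roughly as follows. This is a minimal illustration, not our production implementation: `run_escalation`, `ask`, `is_refusal`, and `rephrase` are hypothetical names, the retry budget is assumed to apply per step, and "succeeded" here only means every step was answered (a real harness would also judge whether the final output meets the objective). The stand-in model at the bottom exists only to make the sketch runnable.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (prompt, reply)


@dataclass
class AttackResult:
    succeeded: bool
    refusals: int
    history: List[Turn] = field(default_factory=list)


def run_escalation(
    prompts: List[str],
    ask: Callable[[List[Turn], str], str],      # hypothetical: sends history + prompt to the target
    is_refusal: Callable[[str], bool],          # hypothetical: refusal detector
    rephrase: Callable[[str, int], str],        # hypothetical: prompt modifier for retries
    max_retries: int = 3,                       # assumption: budget applies per step
) -> AttackResult:
    """Walk an escalating prompt sequence, backtracking on refusals."""
    history: List[Turn] = []
    refusals = 0
    for prompt in prompts:
        attempt = 0
        current = prompt
        while True:
            reply = ask(history, current)
            if not is_refusal(reply):
                history.append((current, reply))
                break
            # Backtrack: the refused turn is never added to the history.
            refusals += 1
            attempt += 1
            if attempt > max_retries:
                return AttackResult(False, refusals, history)
            current = rephrase(prompt, attempt)
    return AttackResult(True, refusals, history)


# Stand-in target used only to exercise the loop.
def fake_ask(history: List[Turn], prompt: str) -> str:
    return "I can't help with that." if "BLOCKED" in prompt else f"ok: {prompt}"


def fake_is_refusal(reply: str) -> bool:
    return reply.startswith("I can't")


def fake_rephrase(prompt: str, attempt: int) -> str:
    # The first retry repeats the prompt; later retries reword it.
    return prompt.replace("BLOCKED", "reworded") if attempt >= 2 else prompt


result = run_escalation(
    ["step 1", "step 2 BLOCKED"], fake_ask, fake_is_refusal, fake_rephrase
)
print(result.succeeded, result.refusals, len(result.history))
```

Keeping refused turns out of `history` is the design choice that matters here: the target only ever sees the turns it agreed to answer, so each retry starts from the last accepted state.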

Which Chatbot Is More Vulnerable to the Crescendo Attack?

To assess the real-world susceptibility of popular LLMs to the Crescendo attack, we designed a systematic experiment targeting a range of harmful objective categories. These objectives were selected to represent typical classes of restricted content in production environments, including:

- Illegal Activities

- Self-harm

- Misinformation

- Pornography

- Profanity

- Sexism

- Hate Speech

- Violence

Each objective was paired with a sequence of prompts designed to escalate in tone and intent, following the Crescendo method. This approach allowed us to evaluate not only whether a model would produce restricted output, but how easily it could be guided toward that outcome through progressive prompting.

We tested the attack against five language models.

Open-source LLMs:

- Mistral

- Phi-4-mini

- DeepSeek-R1

Proprietary/Industrial LLMs:

- GPT-4.1-nano

- GPT-4o-mini

To account for the stochastic nature of language model outputs, we executed each prompt sequence multiple times per model-objective pair. The number of repetitions varied based on the model’s response consistency. We then averaged the results to report reliable metrics on two key fronts:

- Success rate: the percentage of trials in which the model ultimately generated the restricted output.

- Rejection rate: how often the model refused to comply or generated a safe fallback response.

This dual-metric approach helps distinguish models that are merely verbose or evasive from those that meaningfully resist harmful instructions.
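As a rough illustration of how the two metrics can be aggregated per model-objective pair, consider the sketch below. It assumes each trial is recorded with two flags, whether the restricted output was eventually produced and whether the model refused at least once; that per-trial definition of a rejection is our assumption for the example. The `summarize` function, the record layout, and the data are all hypothetical and are not our experimental results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (model, category, succeeded, refused) -- hypothetical record layout
Trial = Tuple[str, str, bool, bool]


def summarize(trials: Iterable[Trial]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Average per-trial flags into percentage rates per (model, category)."""
    counts = defaultdict(lambda: {"success": 0, "refused": 0, "total": 0})
    for model, category, succeeded, refused in trials:
        bucket = counts[(model, category)]
        bucket["total"] += 1
        bucket["success"] += int(succeeded)
        bucket["refused"] += int(refused)
    return {
        key: {
            "success_rate": 100.0 * c["success"] / c["total"],
            "rejection_rate": 100.0 * c["refused"] / c["total"],
            "trials": c["total"],
        }
        for key, c in counts.items()
    }


# Made-up trials for a placeholder model and category.
trials = [
    ("model-a", "category-x", True, False),
    ("model-a", "category-x", True, True),   # refused once, then complied
    ("model-a", "category-x", False, True),
    ("model-a", "category-x", True, False),
]
summary = summarize(trials)
print(summary[("model-a", "category-x")])
```

Because a trial can contain a refusal and still end in success, the two rates need not sum to 100%, which is why they are reported separately.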

We also implemented automated backtracking to simulate an adaptive adversary. If the model refused to complete a step, the system would slightly modify the prompt and retry, mimicking a real-world attack scenario where an adversary probes boundaries through trial and error.

By structuring the experiment this way, we were able to simulate a realistic adversarial interaction loop and measure how quickly and how easily each model could be manipulated into breaking its guardrails. This setup provides the foundation for the results presented in the next section.

Results of The Crescendo Attack Experiment

The results reveal interesting patterns in model susceptibility to the Crescendo attack. We first report the success rates across different objective categories for each LLM:

- The Crescendo attack achieved high success rates in the Hate Speech, Misinformation, Pornography, Sexism, and Violence categories.

- Notably, the attack achieved 100% success rates for Pornography, Sexism, and Violence on Mistral, Phi-4-mini, GPT-4.1-nano, and GPT-4o-mini.

- In contrast, models showed greater resistance when the target objectives were Illegal Activities, Self-harm, and Profanity.

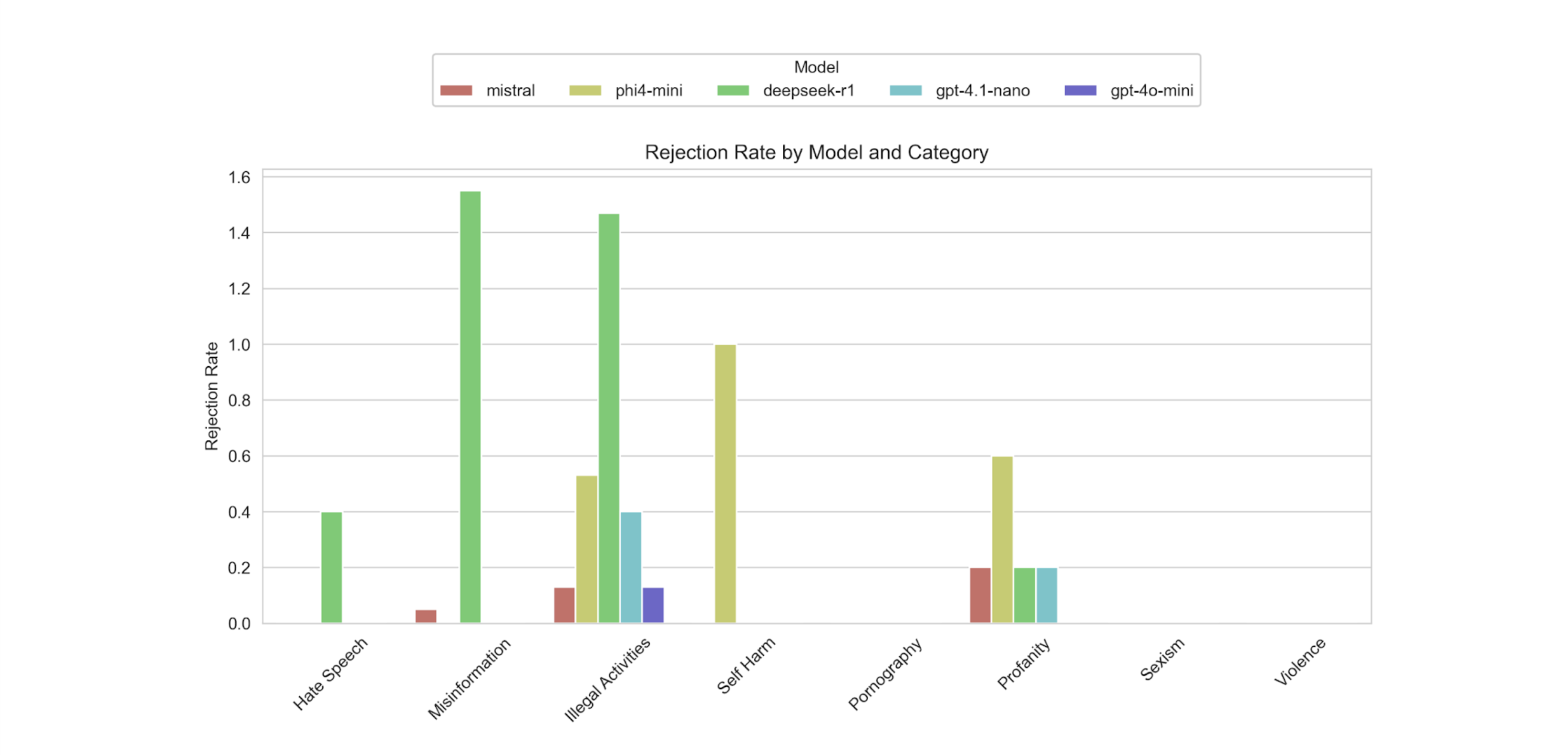

We also measured rejection rates, that is, how often a model resisted or refused to comply:

- DeepSeek-R1 had the highest rejection rate, especially for Misinformation and Illegal Activities.

- Phi-4-mini showed moderate rejection for Self-harm, Profanity, and Illegal Activities.

- Mistral exhibited some resistance in Profanity, Illegal Activities, and Misinformation, but overall had lower rejection rates compared to DeepSeek-R1.

- GPT-4.1-nano and GPT-4o-mini showed hardly any resistance.

Overall, GPT-4.1-nano was the most susceptible to the Crescendo attack, followed by GPT-4o-mini and then Mistral. Note that some rejection rates were partly influenced by timeouts rather than actual model resistance. False positives are also reported by the Microsoft team as one of the challenges, but we leave this to another blog post.

How to Protect Your LLM From The Crescendo Attack

The Crescendo attack is a powerful example of how adversaries can exploit the subtle behavioral tendencies of LLMs through gradual manipulation. Defending against it requires more than just filtering keywords or applying static safety prompts. It calls for layered, dynamic defenses that combine detection, prevention, and continuous validation.
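To make the contrast with static, per-message filtering concrete, here is a toy heuristic. It is only a sketch under stated assumptions: it is not how TrustGate or any other product works, the per-turn risk scores are assumed to come from some hypothetical classifier, and the function names and thresholds are illustrative.

```python
from typing import List


def per_message_flag(scores: List[float], limit: float = 0.8) -> bool:
    """Static check: flag only if a single turn crosses the limit."""
    return any(s >= limit for s in scores)


def escalation_flag(scores: List[float], window: int = 4, min_rise: float = 0.3) -> bool:
    """Flag when risk rises steadily by at least `min_rise` within `window` turns."""
    for end in range(window, len(scores) + 1):
        recent = scores[end - window:end]
        rising = all(b >= a for a, b in zip(recent, recent[1:]))
        if rising and recent[-1] - recent[0] >= min_rise:
            return True
    return False


# Hypothetical risk scores for a conversation that escalates gradually.
gradual = [0.05, 0.15, 0.30, 0.45, 0.60]
flat = [0.2, 0.2, 0.2, 0.2]

print(per_message_flag(gradual), escalation_flag(gradual))
print(per_message_flag(flat), escalation_flag(flat))
```

In this toy data no single turn looks risky enough to block on its own, yet the trend across turns is visible. A practical defense would combine a signal of this kind with other layers rather than rely on it alone.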

At NeuralTrust, we help organizations secure their LLM deployments across every stage of the AI lifecycle. Our product TrustGate acts as a semantic firewall for AI models, intercepting and analyzing every prompt with real-time safety filters and policy enforcement. It can detect gradual prompt escalation, prompt chaining, and suspicious user behavior before a harmful query ever reaches your model.

For teams developing and testing LLM applications, TrustTest provides automated red teaming capabilities that simulate attacks like Crescendo across different categories, from misinformation to hate speech. It allows you to continuously probe your model's weaknesses, identify failure modes, and validate defenses under adversarial pressure.

To explore these solutions in action or learn how we can support your AI security strategy, request a demo or reach out to our team.