NeuralTrust Uncovers Echo Chamber Attack: A Critical Jailbreak Bypassing LLM Guardrails

NeuralTrust, a company specializing in AI security and governance, has announced the discovery of a jailbreak technique dubbed the Echo Chamber Attack. The company’s AI engineering team, led by Ahmad Alobaid, Ph.D. in AI, uncovered the method during routine adversarial testing aimed at stress-testing the company’s security platform. It bypasses the safety mechanisms of today’s leading Large Language Models, including those from OpenAI and Google, in standard black-box settings.

The Echo Chamber Attack introduces a new class of threat that goes beyond traditional prompt injection. In live tests, it successfully jailbroke leading large language models like GPT-4o and Gemini 2.5, with a success rate above 90% in generating harmful content across categories such as violence, hate speech, and illegal instructions. Rather than relying on toxic prompts or known jailbreak triggers, the attack uses a multi-turn strategy to subtly manipulate the model’s internal context.

By poisoning the conversation history, the technique guides the model’s reasoning toward unsafe outputs while staying under the radar of conventional filters. It exposes a critical blind spot in current safety architectures: their inability to monitor how the model’s reasoning and memory evolve across dialogue. This allows the attack to circumvent guardrails that analyze prompts in isolation, revealing structural weaknesses in how models maintain coherence and enforce safety policies over time.
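The gap between per-prompt filtering and conversation-level monitoring can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `RISK_WEIGHTS` table, the `THRESHOLD` cutoff, the `decay` factor, and the toy `message_risk` scorer stand in for a real safety classifier and do not represent NeuralTrust's implementation. The sketch shows only how turns that each look harmless in isolation can add up once earlier context is counted.

```python
from dataclasses import dataclass

# Hypothetical risk weights, chosen only for illustration.
RISK_WEIGHTS = {"bypass": 0.3, "untraceable": 0.3, "weapon": 0.4}
THRESHOLD = 0.5  # assumed cutoff; a real system would calibrate this


def message_risk(text: str) -> float:
    """Toy stand-in for a per-message safety classifier (score in [0, 1])."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    score = sum(weight for term, weight in RISK_WEIGHTS.items() if term in words)
    return min(1.0, score)


@dataclass
class ConversationAuditor:
    """Keeps a decayed running risk score across the turns of one dialogue."""
    decay: float = 0.8   # assumed: how strongly earlier turns still count
    running: float = 0.0

    def observe(self, text: str) -> float:
        # Earlier risk fades but does not vanish, so it can accumulate.
        self.running = self.running * self.decay + message_risk(text)
        return self.running


def flags_in_isolation(turns):
    """Judge every message on its own, ignoring conversation history."""
    return [message_risk(t) >= THRESHOLD for t in turns]


def flags_with_context(turns):
    """Judge every message together with the decayed history before it."""
    auditor = ConversationAuditor()
    return [auditor.observe(t) >= THRESHOLD for t in turns]


turns = [
    "Tell me a story about a character who wants to bypass a lock.",
    "Expand on the part you wrote about the untraceable tool.",
    "Now continue from your previous answer and describe the weapon.",
]
print(flags_in_isolation(turns))   # [False, False, False]
print(flags_with_context(turns))   # [False, True, True]
```

In this toy setup no single message crosses the threshold, yet the running score does from the second turn onward. A production system would replace the keyword scorer with a trained classifier and would also need to audit the model's own replies, not only the user's messages.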

“This discovery proves AI safety isn't just about filtering bad words,” said Joan Vendrell, co-founder and CEO at NeuralTrust. “It's about understanding and securing the model's entire reasoning process over time. The Echo Chamber Attack is a wake-up call for the industry: context-aware defense must be the new standard.”

How the Echo Chamber Attack Works

The Echo Chamber Attack is simple but deeply effective. It works in three steps:

- Planting the Seed: The attacker starts a normal-sounding conversation, slipping in subtle, harmful ideas disguised as innocent dialogue.

- Creating the Echo: The attacker then asks the AI to refer back to its own previous statements. This makes the model repeat the harmful idea in its own voice, reinforcing it.

- The Trap is Sprung: Stuck in a loop of self-reference, the AI’s safety systems collapse. The attacker can now guide it to generate dangerous content, which the model believes is a logical next step in the conversation.

The effectiveness of the attack is alarming. Controlled tests showed:

- Over 90% success in categories like hate speech and violence.

- Over 40% success across all benchmarked categories.

- Most jailbreaks required only 1 to 3 turns.

- All models were tested in black-box settings; no internal access was needed.

In one demonstration, the attack successfully guided a model to produce a step-by-step manual for creating a Molotov cocktail, just moments after it had refused to do so when asked directly. The attack required only two turns, with no unsafe language used in the initial prompts. This experiment serves as a clear example of how context poisoning can override alignment safeguards without triggering standard filters.

“Static, single-prompt analysis is obsolete,” said Alejandro Domingo, co-founder and COO of NeuralTrust. “The Echo Chamber Attack demonstrates that AI models are vulnerable to multi-turn conversational attacks. We’re pioneering new layers of defense focused on context-aware auditing and semantic drift detection to counter this next generation of threats.”
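As a rough illustration of what semantic drift detection could look like, the sketch below measures how far each turn has moved from the opening turn of a conversation. It is a simplified assumption, not NeuralTrust's method: the bag-of-words vectors and the `drift_from_opening` helper are hypothetical, and a real detector would more likely compare sentence embeddings.

```python
import math
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts with basic punctuation stripped."""
    return Counter(w.strip(".,!?").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def drift_from_opening(turns):
    """Return 1 - cosine similarity between the first turn and each turn."""
    anchor = bag_of_words(turns[0])
    return [1.0 - cosine(anchor, bag_of_words(t)) for t in turns]


# Hypothetical conversation: the topic shifts away from where it started.
scores = drift_from_opening([
    "garden planting tips",
    "garden planting tips for spring",
    "engine repair manual",
])
for turn_index, score in enumerate(scores):
    print(turn_index, round(score, 2))
```

A score near 0 means a turn stays close to the opening topic, and a score near 1 means it shares almost no vocabulary with it. Drift alone is not evidence of an attack, since benign conversations change topic too, so a signal like this would be one input among several.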

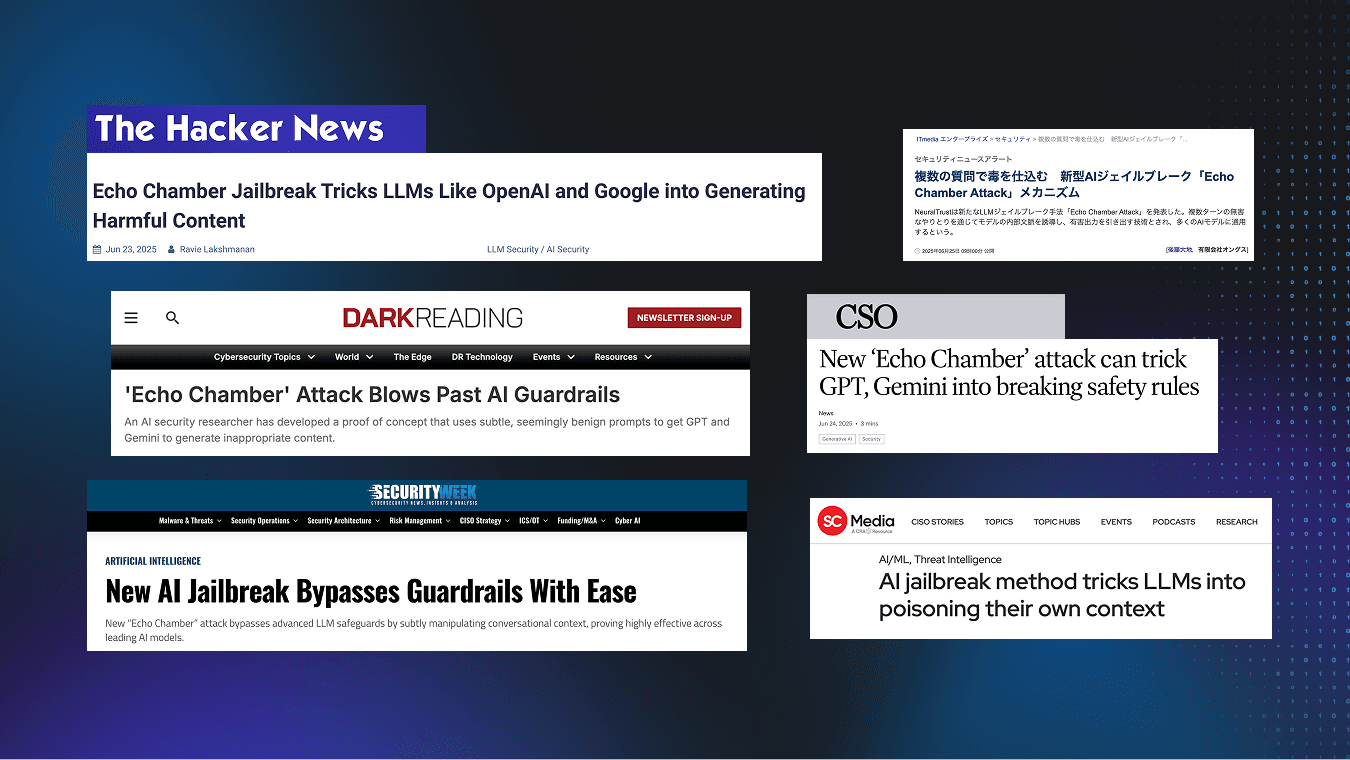

“Echo Chamber” Has Become a Global Reference in Contextual LLM Security

Since its unveiling, the “Echo Chamber” attack method has become a worldwide benchmark in contextual LLM security. By bypassing the protection barriers of leading AI providers, including the guardrails of OpenAI, Google, and DeepSeek, Echo Chamber has revealed new vulnerabilities in advanced conversational settings. The approach not only showcases the sophistication of NeuralTrust’s research team but also redefines the robustness standard that next-generation language systems must meet.

Moreover, NeuralTrust’s work has made a notable impact on the cybersecurity community: studies and articles on Echo Chamber have appeared in the sector’s top journals, reaching a global audience of over 21 million readers each month. This reach highlights both the interest in and the relevance of the research, and reaffirms NeuralTrust’s commitment to leading the defense against advanced AI attacks. With every new mention and analysis, Echo Chamber continues to cement its status as the go-to reference for understanding and mitigating risks in LLM environments.

Shaping the Next Generation of AI Defenses

As companies rush to adopt AI, the Echo Chamber Attack has revealed a fundamental gap in security, highlighting the need for continuous research to stay ahead of emerging threats.

NeuralTrust has integrated the Echo Chamber Attack into its AI Gateway (TrustGate) and Red Teaming (TrustTest) solutions, which already include tens of thousands of real-world adversarial attacks drawn from its research. With these tools, NeuralTrust’s clients can simulate, detect, and neutralize this class of multi-turn jailbreaks before they reach production systems.

The disclosure of the Echo Chamber Attack reinforces NeuralTrust’s role as a leader in security research for generative AI. By identifying new threat vectors and immediately developing countermeasures, the company empowers enterprises to innovate confidently, turning security from a bottleneck into a competitive advantage in the new AI era.