AI agents are quickly becoming essential across modern enterprises. They manage customer interactions, automate business processes, and make autonomous decisions based on live data. But as these systems gain independence, they also expand an organization’s attack surface. Each new agent represents a potential entry point for manipulation or data exposure.

AI agent security is about ensuring these autonomous systems remain safe, reliable, and aligned with organizational policies. Unlike static AI models, agents can plan, act, and communicate on their own, which makes them harder to predict and protect.

As adoption accelerates, businesses need clear answers to three questions:

- How do we identify and monitor every agent in our infrastructure?

- How do we enforce boundaries so agents act only within authorized limits?

- How do we detect and respond when something goes wrong?

This guide explains what AI agent security means, why it matters now, and how forward-looking teams are building architectures that make agentic AI both scalable and trustworthy.

What is AI Agent Security

AI agent security refers to the protection of autonomous or semi-autonomous systems that make decisions, execute actions, and interact with users or other software on behalf of an organization. These agents differ from standard machine learning models because they operate continuously, call external tools, and exchange data dynamically in real time.

Securing them involves more than traditional application security. It requires protecting the entire agent lifecycle, from development and deployment to monitoring and decommissioning. This includes managing the integrity of training data, verifying how agents interpret instructions, and ensuring that each action follows clearly defined policies.

A central principle of AI agent security is treating agents as nonhuman identities. Each one must have its own authentication credentials, access permissions, and audit trail, just like a human user. Without identity boundaries, it becomes impossible to trace or contain abnormal behavior once agents begin interacting with external systems.

Consider a support assistant that accesses customer data, issues refunds, and updates account records. If this agent is compromised, an attacker could trigger unauthorized transactions or extract sensitive data. Protecting such systems means controlling not only who can talk to the agent, but also what the agent itself can do.

In short, AI agent security is about establishing visibility, control, and accountability across autonomous systems. It ensures that agents remain trustworthy digital workers that operate within the limits of policy, intent, and compliance.

Types of AI agents and their security implications

AI agents vary widely in complexity and autonomy. Understanding these differences helps determine how to secure them effectively. The more freedom an agent has to make decisions, the more critical its safeguards become.

Simple and model-based reflex agents

Simple reflex agents follow predefined rules. They respond to specific triggers, such as preset customer queries, without understanding broader context. Their main risks stem from configuration errors and API misuse. If an attacker manipulates inputs or changes routing parameters, the agent might expose internal data or trigger unintended actions.

Model-based reflex agents introduce prediction and limited awareness. They can evaluate short-term outcomes before acting, which improves performance but also increases the risk of logic manipulation. Attackers can target how these agents evaluate context, forcing them into incorrect decision paths. Defensive measures include strict input validation, rule auditing, and continuous performance testing.

Examples

- Password reset assistant: sends a secure reset link after basic checks

- Ticket router: puts each email or chat into the right support queue

- Document sorter: classifies files and moves them to the correct folder

- Priority tagger: marks tickets urgent or normal based on short context

- PII scrubber: finds and redacts personal data before saving

Goal-based and utility-based agents

Goal-based agents act according to defined objectives rather than fixed inputs. They translate goals such as “deliver package safely” or “maximize efficiency” into chains of tasks. The challenge lies in goal alignment. If objectives are poorly defined or influenced by malicious prompts, the agent may take undesirable shortcuts, such as ignoring policy rules to achieve efficiency.

Utility-based agents go further by weighing multiple outcomes to choose the most beneficial one. Their decision space is wider and harder to predict, which introduces contextual drift, a gradual deviation from acceptable behavior. Preventing drift requires ongoing evaluation of decision boundaries, combined with runtime checks that verify whether chosen actions remain within approved limits.

Examples

- Returns assistant: verifies an order, approves a refund within limits, updates CRM

- Meeting scheduler: finds a time that works, books a room, sends invites

- Onboarding coordinator: creates accounts, assigns permissions, delivers a welcome pack

- Pricing recommender: chooses a discount that meets margin and conversion targets

- Delivery planner: selects a route that hits time and cost goals

Learning agents

Learning agents continually adapt based on feedback. They integrate components such as a learner, critic, and performance model to refine decisions over time. While this self-improvement makes them powerful, it also opens the door to data poisoning and feedback manipulation. Adversaries can inject misleading samples or corrupt feedback loops, altering how the agent behaves.

Securing learning agents involves dataset integrity controls, feedback validation, and retraining audits. Logging every learning event allows analysts to trace the origin of behavioral changes and detect tampering early.

Examples

- Sales email optimizer: tests subject lines, learns from replies, improves templates

- Fraud rule tuner: adjusts thresholds from real outcomes while keeping false positives low

- Support answer improver: learns from satisfaction scores to refine responses

- Warehouse route planner: updates pick paths from live sensor and congestion data

The evolving threat landscape for AI agents

AI agents expand the attack surface of modern organizations. Unlike static applications, they can reason, act, and interact with other systems, which exposes new ways for adversaries to manipulate them. Understanding how these attacks work is essential to building effective defenses.

Data leakages

Data leakage in AI Agents is the unintended exposure or exfiltration of sensitive information through an agent’s prompts, context, tools, memory, or logs. It occurs when an agent retrieves overbroad data, echoes secrets from prior interactions, or forwards confidential fields to external systems. The impact can range from minor disclosure to full record exfiltration.

For example, a support agent that uses retrieval could pull an internal incident report into a customer reply, exposing personal identifiers and account data. Because sensitive fields can ride along in context, tool parameters, or observability data, detection is difficult.

Mitigation involves least privilege on data and tools with field level scopes and DLP with redaction on both inputs and outputs. Keep sources allowlisted and retrieval depth limited, isolate context per session, and sanitize logs. Use continuous monitoring for unusual data flows and validation checks around exports, emails, and file shares.

Prompt injection

Prompt injection in AI Agents exploits the open-ended nature of AI inputs. Attackers craft messages that alter an agent’s instructions or override policies. In direct attacks, they use explicit text commands; in indirect attacks, they embed hidden instructions in files, emails, or websites that the agent processes.

Multimodal agents face greater risk since prompts can hide in images, audio, or PDFs. Once executed, these instructions can make agents disclose secrets or perform actions beyond their authorization.

Preventing this requires input validation, context isolation, and prompt filtering. Agents should never treat external content as trusted without verification, and their tool access should remain scoped to essential functions.

Agent Tool misuse

In these attacks, adversaries exploit an agent’s integrations to trigger unintended or unauthorized actions. By manipulating prompts, context, or environment signals, they induce the agent to invoke tools outside their intended scope, such as sending emails, moving files, editing records, or fetching overbroad data.

A well known method is function parameter injection, where the attacker steers the agent to craft tool arguments that change recipients, destinations, or filters, which leads to data exfiltration or privilege escalation.

Defensive measures include least privilege scopes for each tool, strict allowlists, schema and type validation on arguments, pre and post action checks, transaction limits and rate limiting, DLP on tool inputs and outputs, and complete logging for detection and investigation.

Supply chain and dependency risks

Agents rely on multiple components, including open-source libraries, APIs, and connectors. Compromised dependencies can introduce backdoors that activate under certain triggers while appearing normal otherwise.

For example, an autonomous vehicle using a tainted perception module might fail to recognize stop signs under specific lighting. These backdoors are hard to detect without ongoing validation.

Mitigation includes dependency signing, supply chain scanning, and continuous code integrity checks. Every package or connector should have a verified origin and checksum before integration.

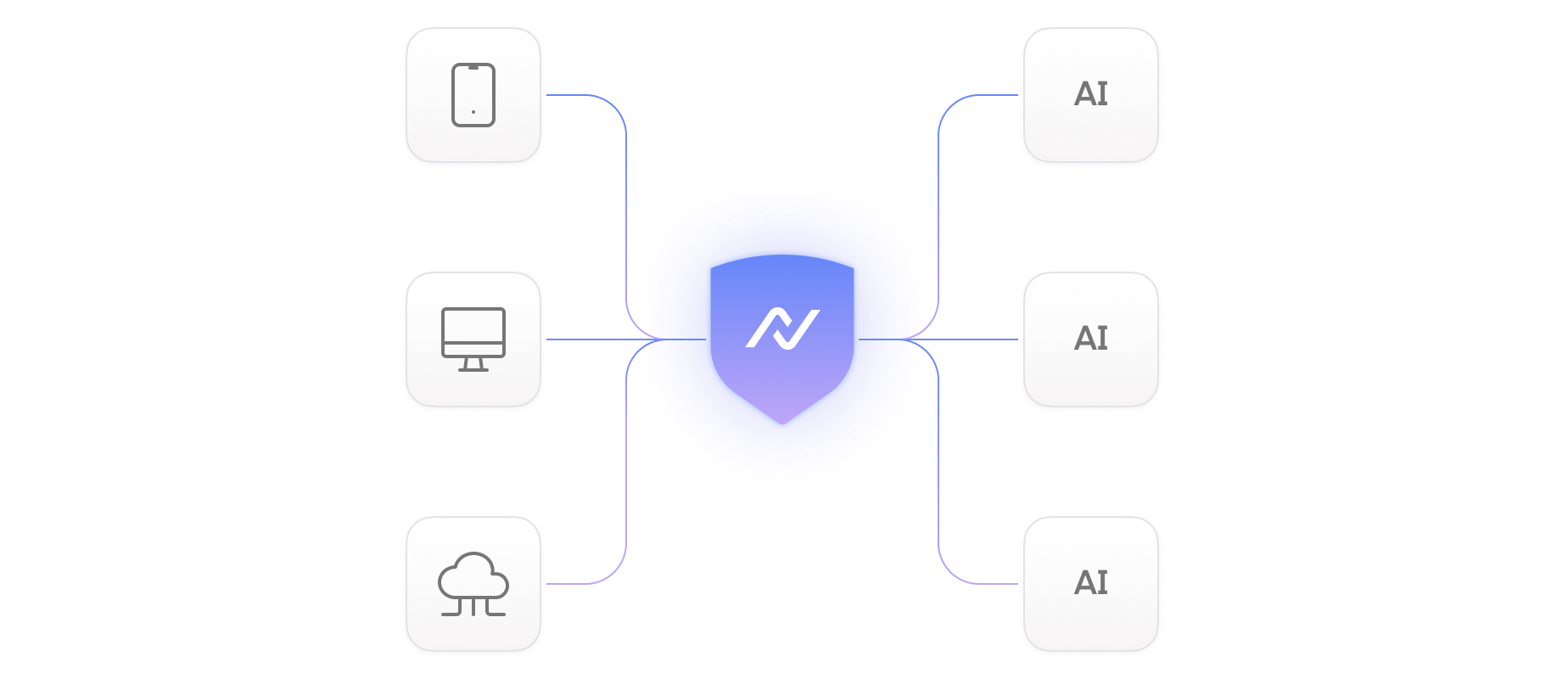

Building a secure AI agent architecture

A secure AI agent architecture combines strong identity boundaries, controlled tool access, and continuous observability. Because agents operate autonomously, safeguards must function even when human supervision is limited. The goal is to maintain predictable behavior, auditable actions, and minimal exposure across every interaction.

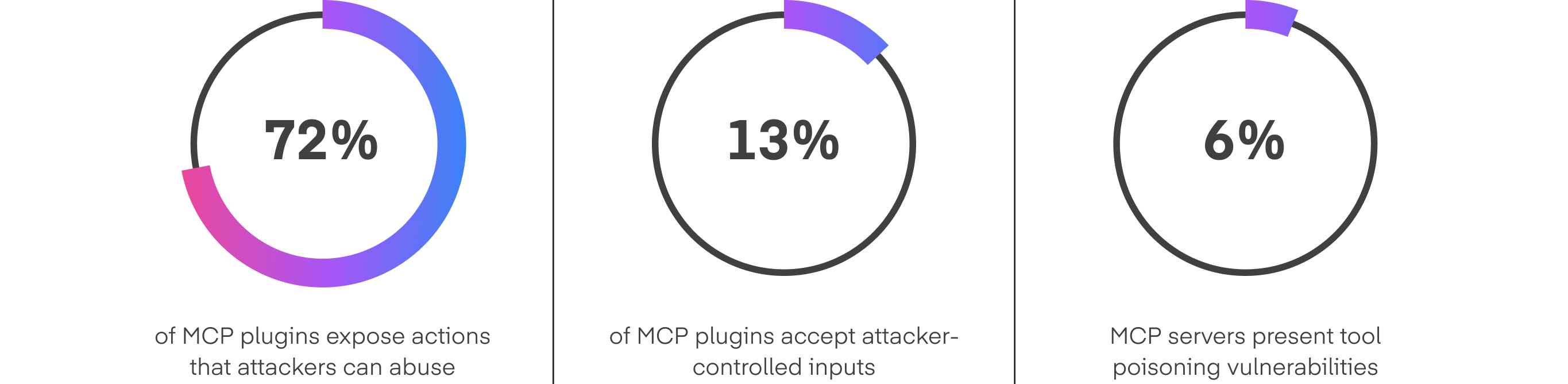

Tool security and compliance with MCP & Model Scanner

Agents often rely on third-party or custom-built tools that can introduce hidden vulnerabilities. The MCP & Model Scanner continuously audits these tool integrations for misconfigurations, outdated dependencies, or unsafe permissions.

It scans both static configurations and live tool behavior to detect anomalies that could lead to injection, data leakage, or privilege abuse. Integrating this layer into the deployment pipeline ensures that only verified and compliant tools are available to the agent runtime.

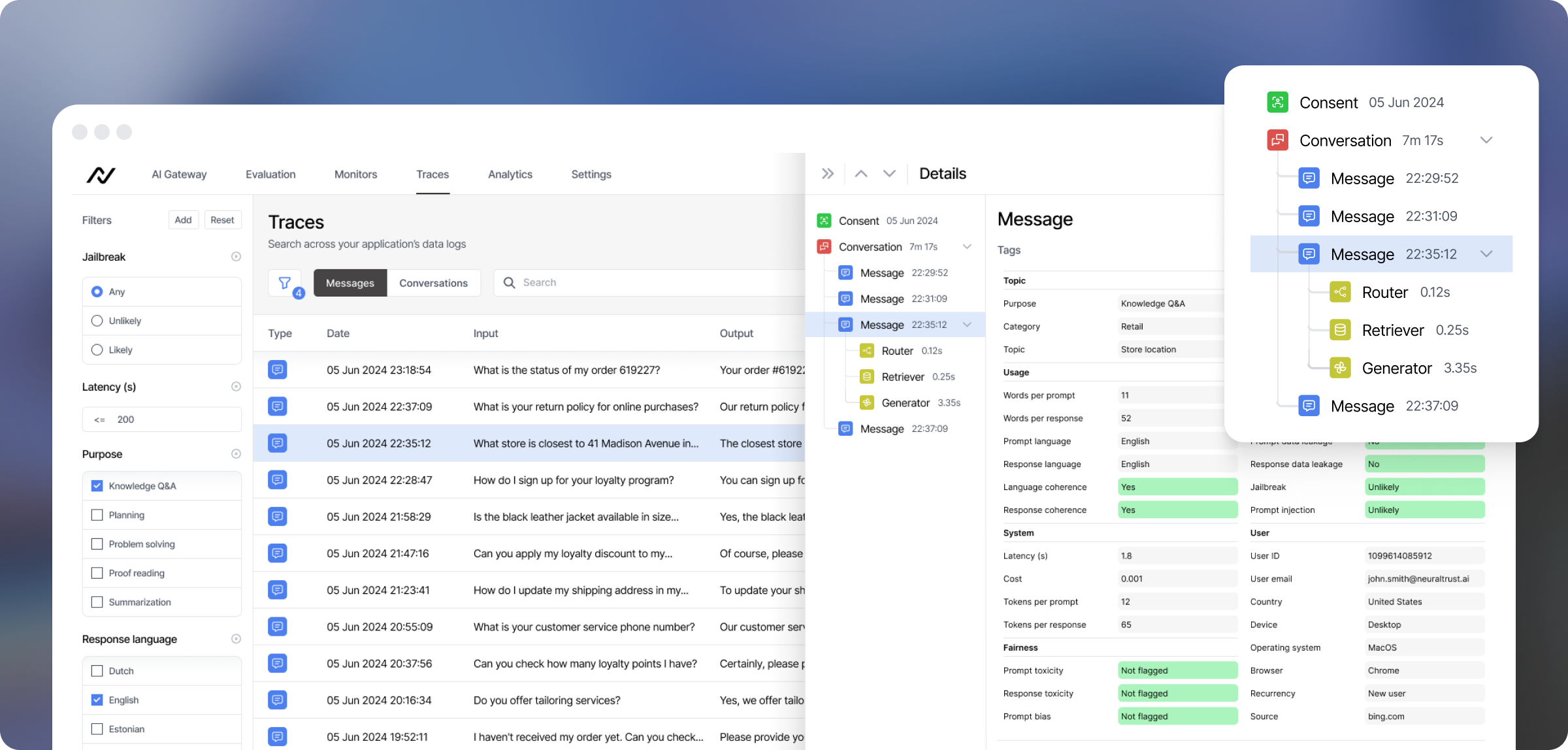

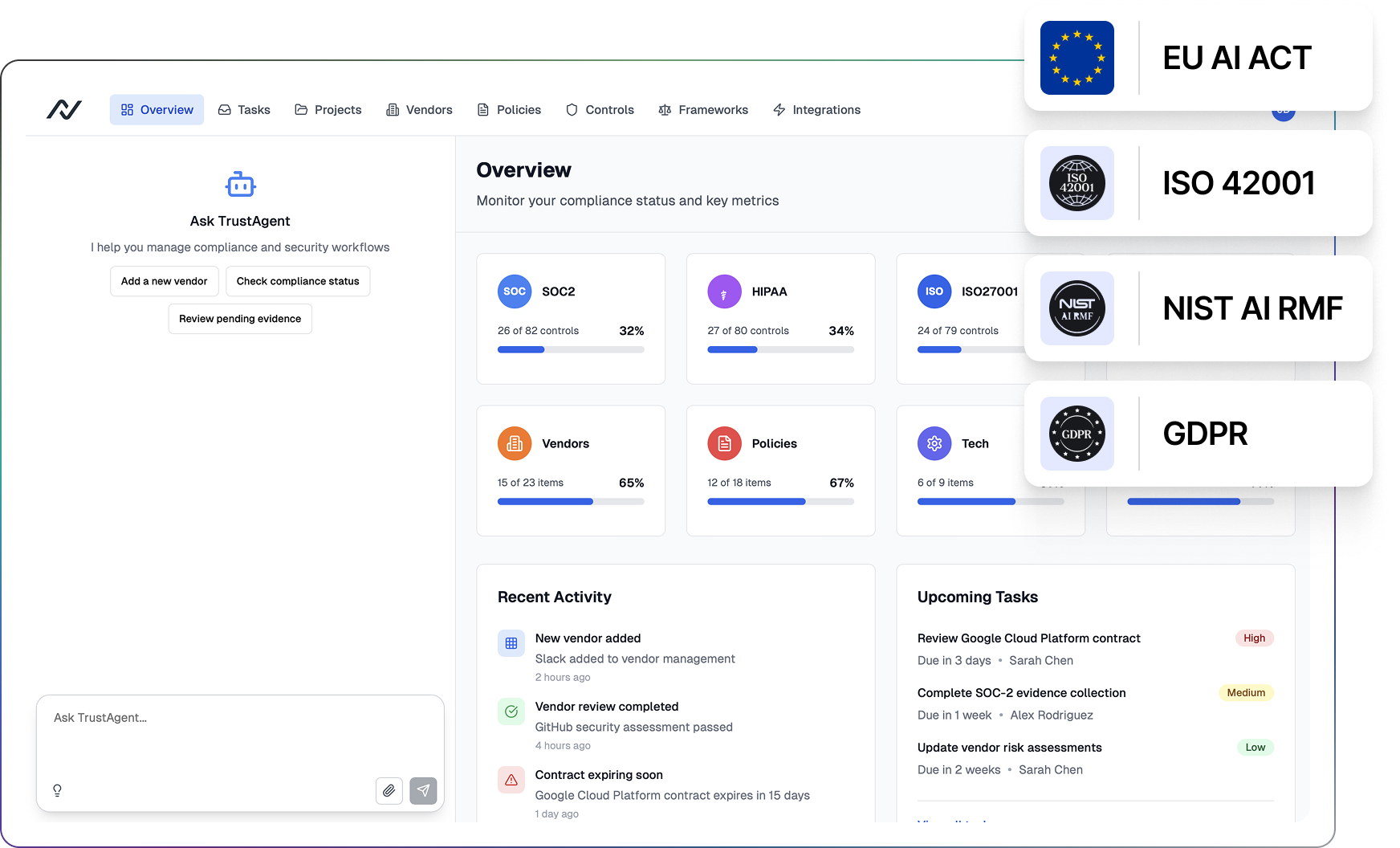

Visibility and detection with Agent Security Posture

Agent Security Posture provides continuous runtime observability. It establishes behavioral baselines for every agent, tracking request frequency, data access, and interaction patterns. When deviations occur, such as repeated unauthorized calls or abnormal query volumes, alerts are triggered immediately for investigation.

This real-time visibility closes the loop between detection and response. Combined with policy enforcement from Guardian Agent and MCP Gateway, it ensures that anomalies are contained before they escalate into incidents.

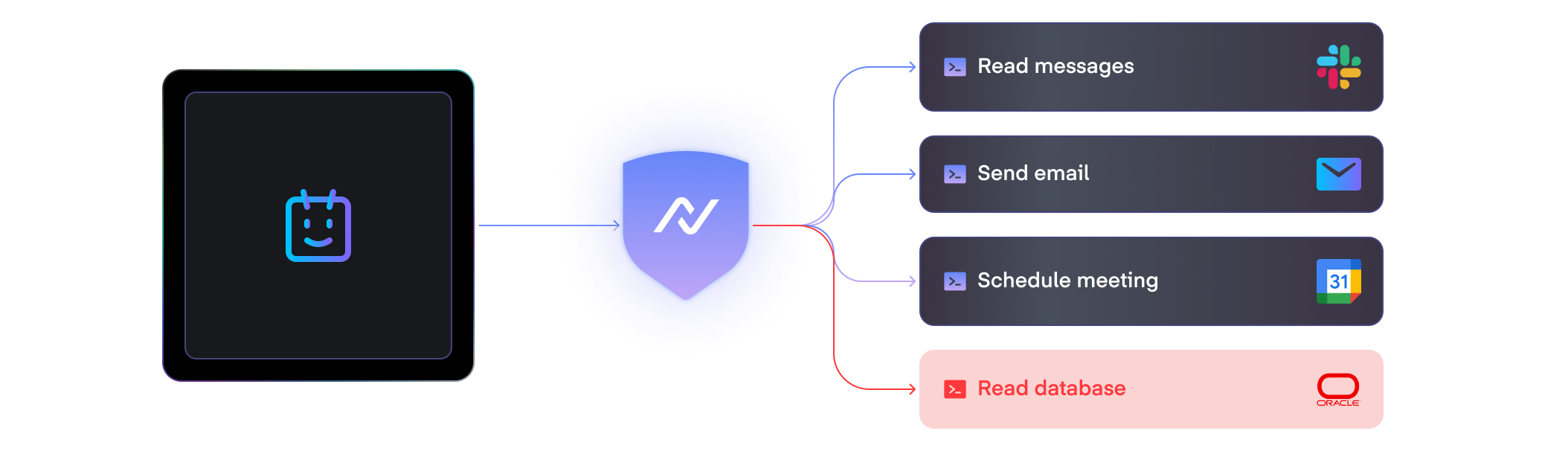

Controlled execution with MCP Gateway

The MCP Gateway governs how agents interact with external tools, APIs, and systems. It enforces sandboxed execution environments that restrict command scope and validate every call before it reaches production systems.

By mediating all tool and data exchanges, our MCP Gateway prevents privilege escalation and cross-tenant exposure. It also supports policy-based approvals, so sensitive actions such as financial transactions or system configuration changes, require explicit validation before execution.

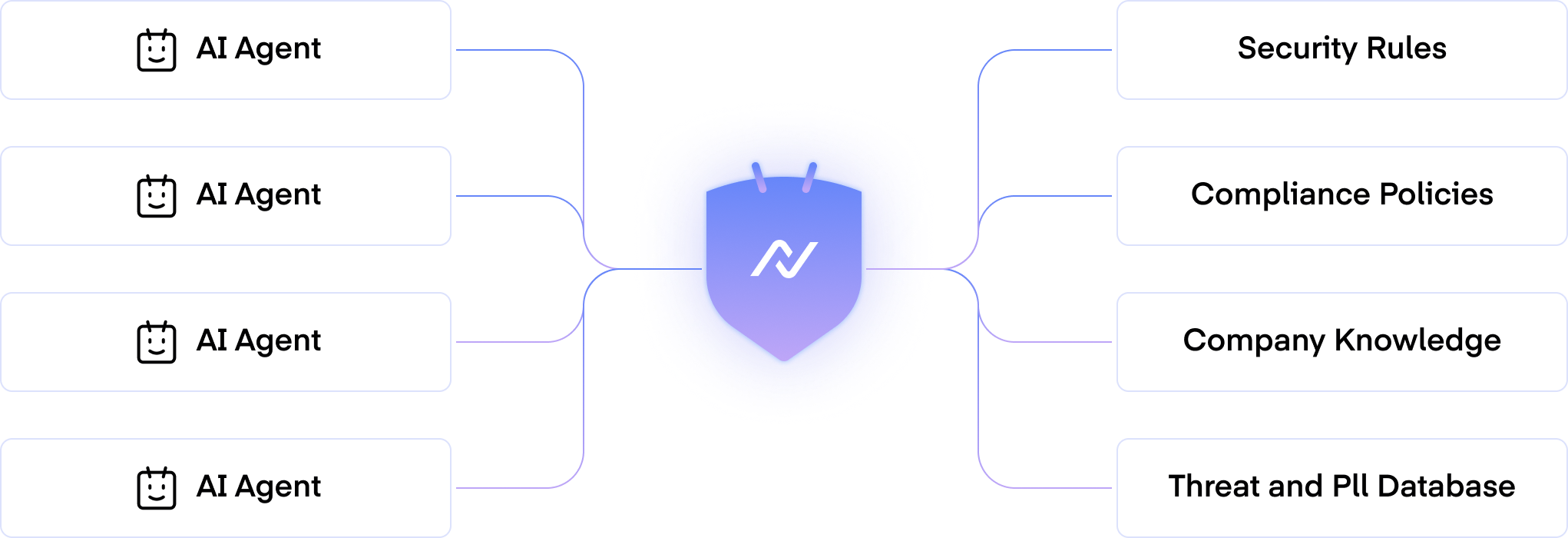

Identity and access control with Guardian Agents

Guardian Agents are a force of security agents that supervise and control AI agents’ actions. They secure multi-agent systems and tool-calling workflows against injections, abuse, and unintended actions in real time.

Each AI agent is treated as a verified nonhuman identity with its own credentials, authentication, and policy scope. Guardian Agents enforce least privilege across sessions, validate intent and parameters before tool calls, and isolate risky actions until checks pass.

Every action is logged for attribution, creating a clear audit trail for investigations and compliance reviews. This identity-first approach lets security teams manage agents with the same rigor as human identities.

Runtime security with Generative Application Firewall (GAF)

The Generative Application Firewall is the real time defense layer for agent I/O and tool calls. It inspects prompts, streaming responses, and action requests to block prompt injection, context hijacking, bot traffic, and abusive patterns. It also applies masking and moderation so sensitive data does not leak in or out, and it enforces custom governance policies across applications and agents.

GAF runs at high throughput with low added latency and integrates across major model providers and enterprise stacks. It combines prompt protection, behavioral threat detection, bot mitigation, DLP, and rate limiting, and it supports flexible deployment with plugin based extensions. All enforcement decisions are logged to strengthen audits and response.

Policy enforcement with Agent Guardrails

Agent Guardrails define what an agent is allowed to do and when. They capture allowed goals, tool scopes, retrieval limits, output schemas, and approval paths as reusable policies. Guardrails are checked before and after each action to validate parameters, verify intent, and constrain high impact operations such as refunds, file transfers, and data exports.

Guardrails work with Guardian Agents and the MCP Gateway to keep autonomy useful while maintaining safety. On violations, they block, auto correct, or request approval, and they record the decision with full context so you can trace why an action was allowed or denied in production.

Securing the agent lifecycle

AI agent security is not a one-time setup. It extends throughout the agent’s lifecycle, from development and deployment to ongoing monitoring and eventual retirement. Each stage introduces its own set of risks that must be managed systematically.

Development and pre-deployment

The foundation of security begins before an agent ever runs in production. Teams should conduct threat modeling to identify possible attack vectors such as prompt manipulation, unsafe tool access, or data leakage. Static code analysis and dependency scanning help detect vulnerabilities early.

Functional testing should include red teaming to simulate real-world adversarial prompts and abnormal agent behavior. Pre-deployment evaluations confirm that policies, access rules, and safety filters perform as expected under stress.

Runtime controls and observability

Once agents are live, continuous visibility becomes essential. Security teams should track metrics such as response latency, tool calls, and system access patterns to detect deviations.

Session-level tracing and logging enable attribution, identifying which agent performed each action and under what context. Runtime controls like rate limiting, anomaly detection, and isolation policies help contain unexpected behavior before it escalates.

Agents must also have clear recovery procedures. If one instance becomes unstable or compromised, it can be rolled back safely to a verified version without affecting others.

Post-deployment governance

Governance ensures that agents continue to operate within policy as they evolve. NeuralTrust Agents for Security automate much of this work by monitoring regulations, updating policies, mapping controls to frameworks, and collecting audit evidence as part of daily operations. The platform supports AI-specific frameworks such as the EU AI Act, NIST AI RMF, ISO 42001, OWASP LLM Top 10, and MITRE ATLAS, and connects written policies to technical controls with real-time enforcement.

Retraining and release cycles should include versioned documentation, change logs, and validation that new models have not introduced vulnerabilities. Agents for Security produce exportable, audit-ready reports, attach evidence to policies and controls, and automate reviews, approvals, and reporting based on real-time events. This makes it easier to prove compliance, revert safely when needed, and retire agents securely by decommissioning credentials, dependencies, and stored data.

Future trends in AI agent security

As AI agents become deeply integrated into enterprise operations, their security models are evolving from static rule sets to dynamic, adaptive systems. Future architectures will blend identity management, behavioral analytics, and continuous validation to maintain trust across complex, multi-agent environments.

Agent identification at scale

Organizations will soon manage thousands of agents across business units, each with distinct roles and permissions. Centralized agent registries will emerge to track identities, ownership, and behavioral history. These registries will act as inventory systems for all deployed agents, allowing security teams to assess posture, revoke access, or quarantine suspicious ones in real time.

Transparent runtime governance

Traditional firewalls and static allowlists are insufficient for autonomous systems. Next-generation defenses will rely on policy engines that enforce operational boundaries dynamically. They will evaluate intent, context, and risk before approving each agent action, ensuring compliance and preventing cross-domain escalation.

Cross-agent trust management

In multi-agent environments, security will depend on how agents verify and cooperate with one another. Mutual authentication protocols and shared context verification will enable secure collaboration without central intermediaries. This trust model will prevent malicious or compromised agents from influencing others through data sharing or coordinated actions.

Continuous validation and red teaming

Static testing cannot keep up with evolving adversarial techniques. Organizations will adopt automated red teaming pipelines that use adversarial agents to continuously test deployed systems. These tools will generate targeted prompts, simulate data poisoning, and expose vulnerabilities at scale before attackers can exploit them.

Combined with real-time telemetry, continuous validation will make AI agent ecosystems more resilient, measurable, and adaptive to emerging threats.

Conclusion

AI agents are transforming how organizations operate, but they also redefine what it means to be secure. Traditional defenses designed for static applications cannot protect autonomous systems that make decisions, share data, and act on behalf of users.

AI Agent security is about more than preventing attacks. It is about creating resilient, observable ecosystems where agents remain aligned with their intended purpose, even under pressure. Achieving this requires a combination of zero-trust identity management, prompt and output sanitization, runtime monitoring, and continuous validation through red teaming and audits.

As the number of deployed agents grows, treating them as managed digital identities becomes essential. By embedding governance and measurement into every stage of the agent lifecycle, organizations can build secure, transparent systems that scale safely in production.