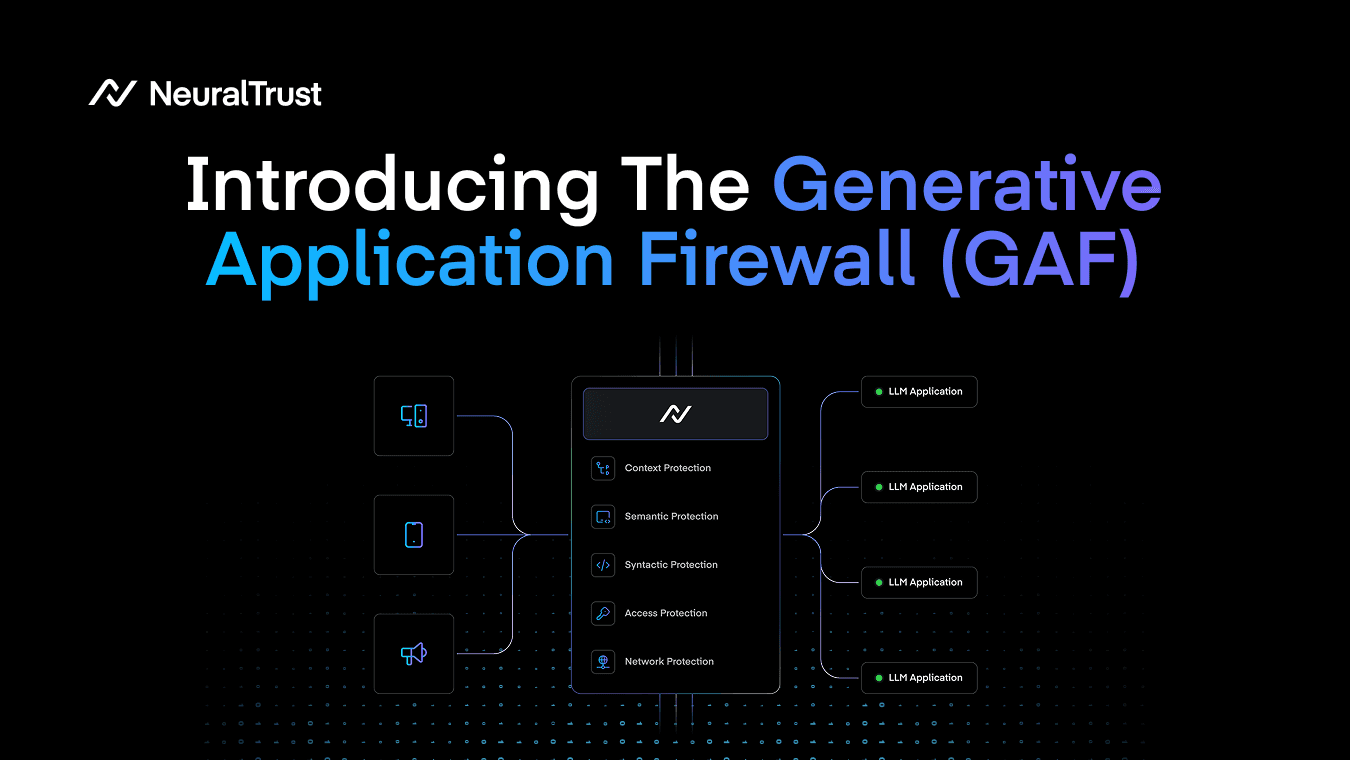

Today, we’re introducing the Generative Application Firewall (GAF), a new architectural layer for securing LLM-powered applications, including autonomous agents and their tool interactions.

Just like a Web Application Firewall (WAF) became essential once web applications introduced new attack surfaces, generative AI applications are now creating an equally urgent security gap. Traditional security layers were not designed for attacks that operate through natural language semantics, multi-turn manipulation, and tool-driven execution.

GAF is our answer to that gap: a unified enforcement layer that can orchestrate existing controls, fill missing protections, and provide a single point of policy enforcement for modern generative systems.

As generative AI moves from demos to production systems, organizations are deploying assistants for customer support, internal productivity, code generation, analytics, and increasingly, agents that can take actions. This is a major shift in how software behaves. But it also changes how systems fail.

The threats we’re seeing are not limited to classic web vulnerabilities. Generative AI introduces attacks like prompt injection, jailbreaking, and context manipulation, where the payload looks like a normal user request at the network level and even at the web application level. That’s why existing controls fall short:

- Network firewalls and IDS (Layer 3-4) inspect packet-level signals, but malicious intent can travel inside perfectly legitimate HTTPS requests.

- Traditional WAFs (Layer 4-7) look for structured patterns (SQL injection, XSS, signatures), but GenAI attacks often contain no obvious payload anomalies.

- Multi-turn attacks can build intent gradually, where each individual prompt looks harmless in isolation.

The result is a “semantic gap” in application security: a space where meaning is the attack surface, and where the old security stack has no visibility.

What is a Generative Application Firewall (GAF)?

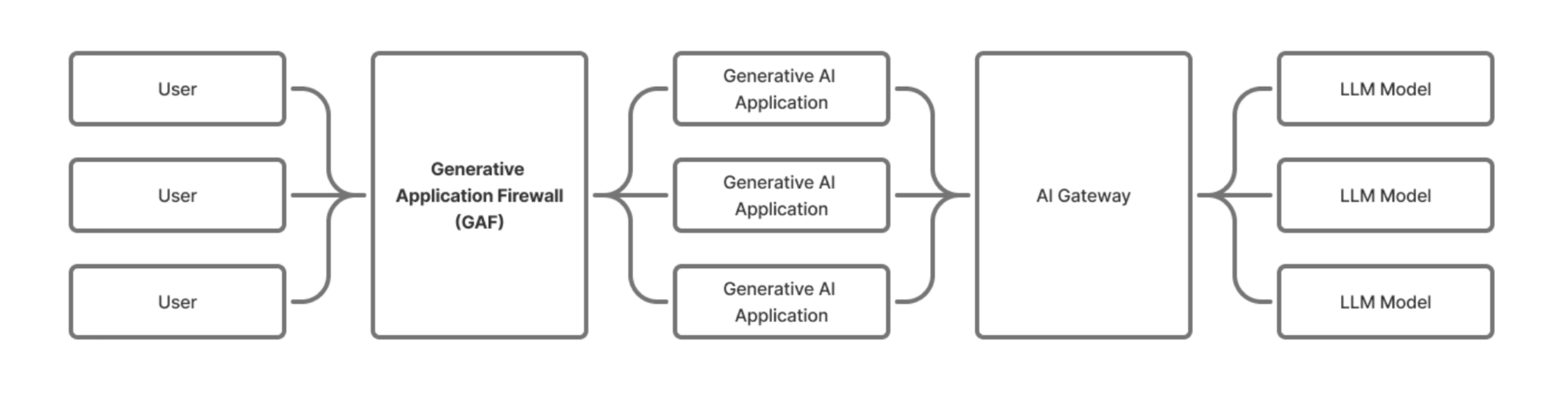

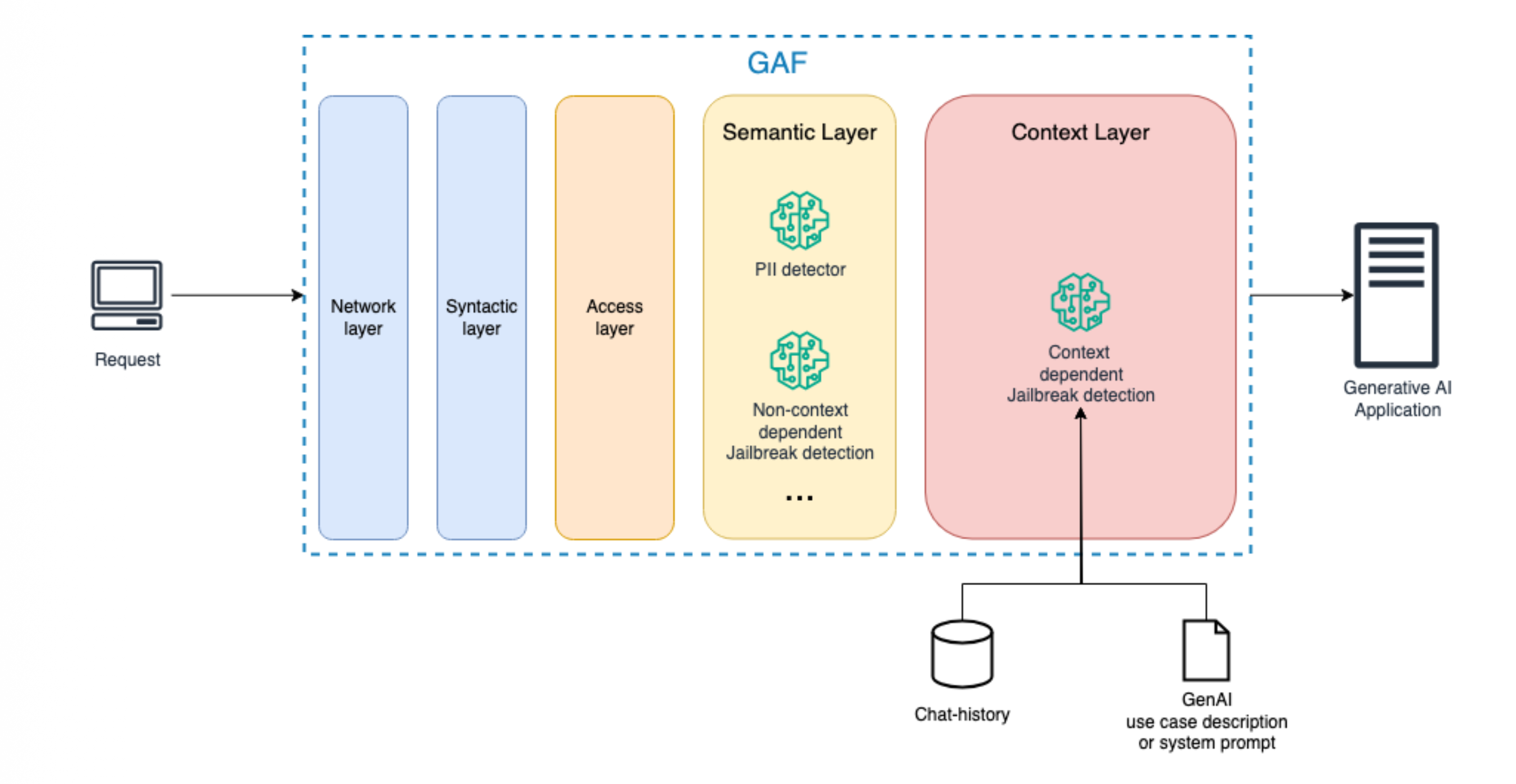

The Generative Application Firewall (GAF) is a security and control layer designed for applications that expose a natural language interface powered by LLMs. It sits between the user and the underlying LLM application, acting as a centralized enforcement point for security policies across the full generative stack.

Unlike prompt-level guardrails that evaluate a single request, GAF maintains a holistic view across users, sessions, and interactions over time, enabling stronger controls for both single-turn threats and multi-turn escalation strategies.

And critically, GAF is designed not only for “chat with an LLM”, but for agentic systems. It can intercept agent tool calls, enforce per-tool policies, and treat tool outputs as untrusted inputs to prevent indirect injection and data poisoning.

Figure 1: GAF as centralized enforcement across Network, Access, Syntactic, Semantic, and Context layers.

A layered security model for generative applications

A core principle of GAF is that securing natural language interfaces requires defense-in-depth across multiple layers, each targeting a different class of risks. We define five layers:

-

Network Layer: Rate limits, abuse prevention, IP filtering, and volumetric protections to mitigate flooding, scraping, DoS, and extraction attempts.

-

Access Layer: Authentication, authorization, role enforcement, and least-privilege policies for users and agents, including scoped tool access.

-

Syntactic Layer: Structural validation of inputs and outputs, schema enforcement for tool calls, format constraints, and protection against obfuscation and injection-like payload shaping.

-

Semantic Layer: Detection of meaning-based attacks that can be identified from a single prompt, including jailbreak patterns and injection attempts that rely on manipulative phrasing rather than code signatures.

-

Context Layer: Longitudinal protection against multi-turn jailbreaks and escalation strategies, tracking dialogue history, role intent, behavioral patterns, and agent planning loops over time.

This structure reflects what generative applications truly are: not just endpoints receiving requests, but interactive systems where intent evolves across turns and actions can propagate through tools.

Figure 2: The context layer is the most difficult part in defining a proper GAF architecture

A new standard layer for generative security

Generative AI is transforming software. But it’s also transforming the security perimeter. GAF provides a missing architectural layer for organizations that want to deploy generative applications safely, consistently, and at scale: not by patching one-off defenses, but by building a unified system of enforcement that understands language, context, and actions.

If you’re working on AI applications, agentic systems, or GenAI governance, we’d love to hear your feedback and collaborate on how this layer should evolve.

The complete research paper is available on arXiv.

Special thanks to our team of researchers at NeuralTrust, and to our elite endorsers for their support and contributions: Christos Kalyvas-Kasopatidis (University of the Aegean), Emre Isik (University of Cambridge), Idan Habler (OWASP GenAI Security Project), Jinwei Hu (University of Liverpool), Strahinja Janjusevic (MIT Computer Science and Artificial Intelligence Laboratory), Osvaldo Ramirez (Center for AI and Digital Policy), Tu Nguyen (Huawei), and Xuanlin Liu (Cloud Security Alliance).