Large Language Models (LLMs) have transformed artificial intelligence, becoming a cornerstone for various applications, including content generation, zero-shot classification, and complex linguistic tasks. Before LLMs, achieving such capabilities required training models tailored to specific tasks—an approach that lacked scalability and adaptability. Today, LLMs can process vast amounts of data, comprehend context, and generate coherent, creative responses, making them invaluable across industries.

A defining characteristic of LLMs is their ability to generate human-like text and insightful responses across diverse topics. However, this same creativity introduces challenges, as LLMs can deviate from intended discussions, engage in off-topic conversations, or even produce undesirable content. In enterprise applications, such as customer service chatbots, these unintended responses can be counterproductive or even harmful. As a result, organizations have sought ways to regulate LLM interactions, ensuring that responses align with business objectives and compliance requirements.

In this post, we’ll review two different approaches to regulating LLM interactions: LLM guardrails and AI gateways. Additionally, we’ll evaluate the top nine AI gateways through a rigorous comparison, analyzing four key variables: throughput (requests per second), average latency (milliseconds), P99 latency (milliseconds), and success rate.

Want to know which AI gateway is best for keeping your AI applications secure while maintaining AI governance?

Read this benchmark to discover the answer.

LLM Guardrails: What’s their role and which are their limitations?

One widely adopted approach to managing LLM behavior is the use of LLM guardrails—intermediary systems that regulate AI-generated outputs. These guardrails help enforce security policies, prevent issues like prompt injection, mitigate LLM security risks, and ensure AI-generated content remains within predefined boundaries. They also perform AI rate limiting and filter out toxic or off-topic responses, enhancing AI governance and compliance.

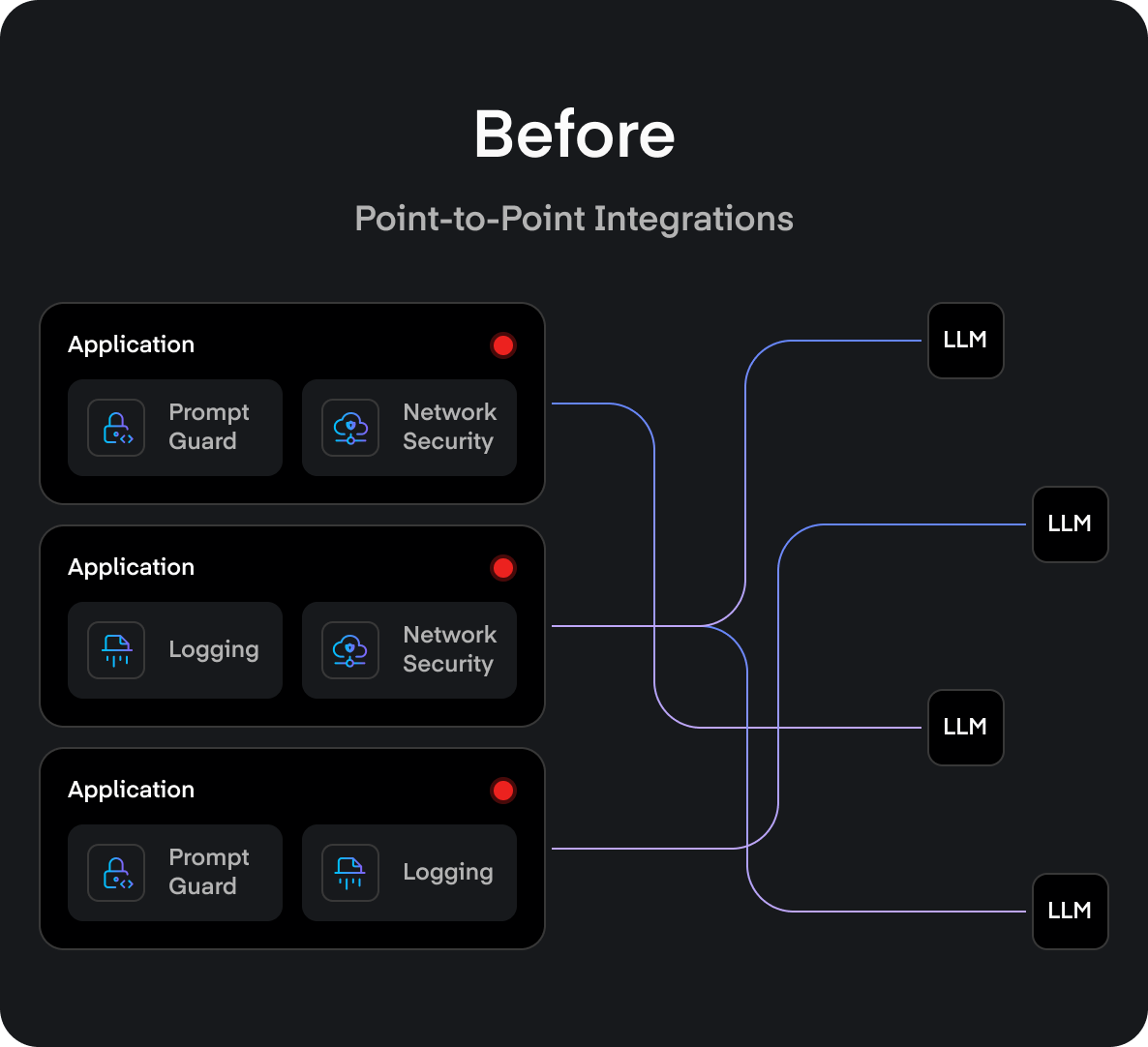

Below you can see how a guardrail architecture looks like:

While effective, traditional guardrails come with significant limitations. As companies scale AI adoption across multiple applications, manually configuring and managing LLM guardrails becomes increasingly complex and error-prone. Ensuring correct deployment, maintaining different versions, and consistently applying policies across diverse use cases introduces operational overhead and risks of misconfiguration.

Additionally, most guardrail implementations rely on static rule sets that struggle to keep pace with the evolving nature of AI threats and misuse patterns. This rigidity hinders scalability, creating fragmentation in AI security and governance.

To solve these challenges, we propose a new paradigm: the AI gateway architecture.

Introducing the AI gateway architecture

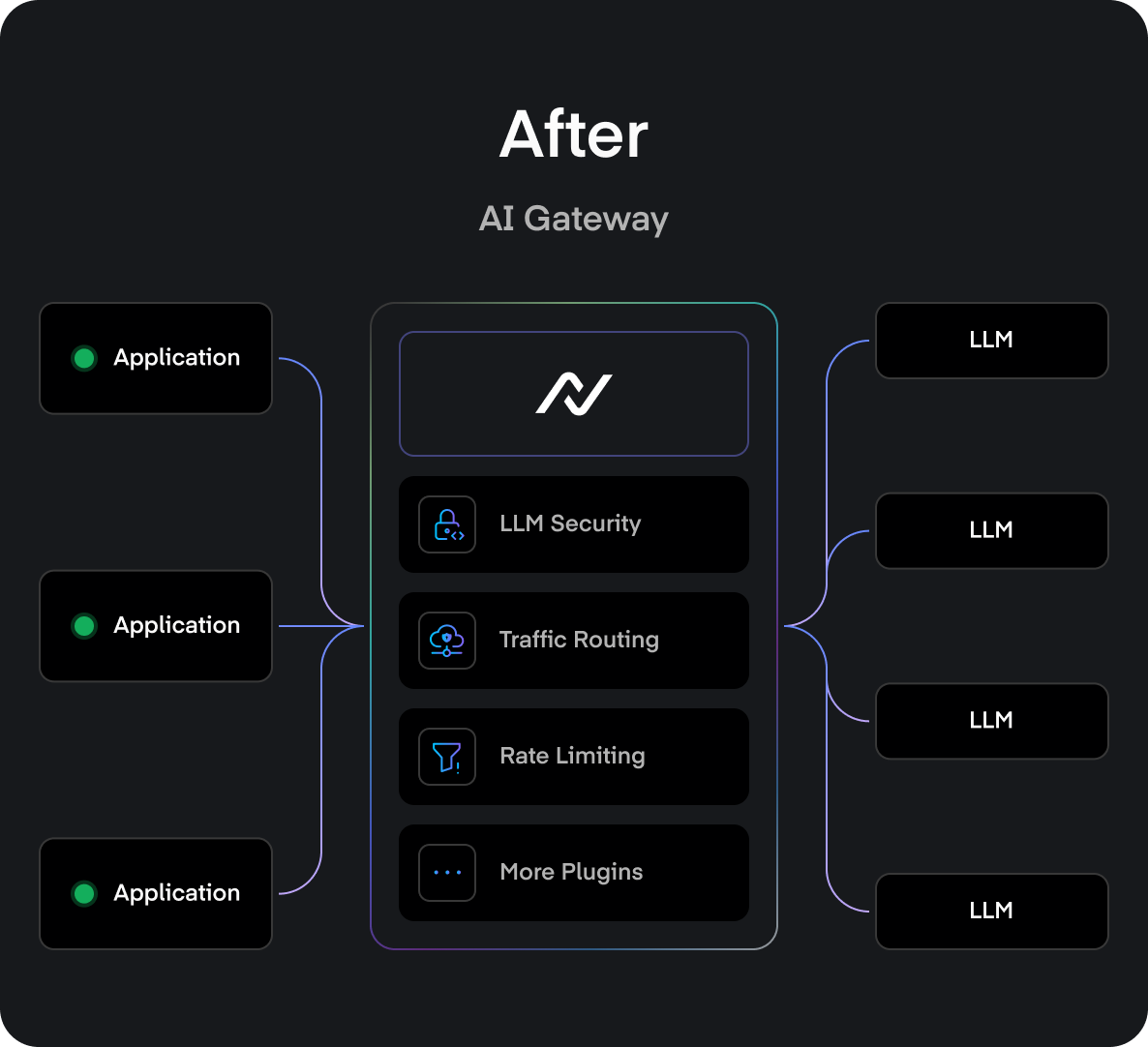

Unlike traditional LLM guardrails, which are implemented at the application level, the AI gateway works similarly to an API gateway but is designed specifically for AI use cases and it is deployed at the infrastructure level. This way, it intercepts calls to LLMs, enforcing policies and controls at an organizational scale rather than on a per-application basis.

This centralized approach ensures uniform enforcement of security policies, compliance measures, and AI governance across all enterprise AI solutions. Besides, it streamlines LLM deployment, eliminates inconsistencies, and reduces operational burdens associated with managing individual guardrails.

Last but not least, by integrating AI API management into a unified gateway, businesses can enforce scalable AI architecture policies, optimize API performance benchmarks, and dynamically update governance rules to adapt to emerging AI risks.

This is how an AI gateway architecture looks like:

By shifting AI security and compliance management to a centralized LLM gateway, organizations gain real-time control over AI interactions, ensuring that all AI-powered applications align with strategic goals and industry regulations.

As enterprises continue to embrace AI-driven innovation, adopting an AI gateway architecture will be key to achieving robust, scalable, and secure AI infrastructure.

Choosing the right AI gateway: Specialized vs. adapted solutions

The market for AI gateways remains relatively limited, with only a handful of specialized solutions available. Consequently, many organizations turn to API gateways as an alternative, leveraging their capabilities in request routing, load balancing, authentication, and AI rate limiting to manage AI-driven applications.

While AI gateways are designed to optimize LLM deployment, enhance AI infrastructure, and enforce LLM moderation, traditional API gateways offer flexibility and broad integration support. This makes them a viable choice for companies looking to adapt existing technology rather than implement a dedicated LLM gateway.

In this section, we’ll explore the specialized AI gateway options available, alongside API gateways that can be tailored to support enterprise AI solutions, ensuring seamless AI API management and scalable AI architecture.

Let’s start:

1. TrustGate

TrustGate is specifically designed to handle AI workloads, ensuring speed, efficiency, and adaptability. Unlike many existing solutions that repurpose traditional API gateways for AI applications, TrustGate is designed from the ground up to handle the unique demands of AI-driven workflows. Legacy API gateways often introduce unnecessary overhead, outdated features and architectural constraints that hinder AI performance rather than optimize it.

Most traditional API gateways are built for general-purpose request handling, which can lead to latency issues and scalability bottlenecks when managing AI workflows. These inefficiencies can degrade response times and limit AI system performance, making it difficult for enterprises to scale effectively.

By contrast, the AI Gateway Architecture is built specifically for high-performance AI interactions. It integrates advanced filtering, AI-specific rate limiting, and real-time monitoring tailored to LLM workloads. This ensures that AI applications process requests efficiently, securely, and in compliance with governance policies.

By leveraging this architecture, organizations gain greater control over their AI systems while maintaining robust governance and oversight. The result? Seamless AI integration, minimal operational bottlenecks, and scalable, high-performance deployments. As AI adoption continues to grow, purpose-built AI gateways set a new industry standard for secure and efficient AI-driven infrastructure.

2. Kong’s AI Gateway

Kong’s AI Gateway is a powerful extension of the Kong Gateway platform, offering a streamlined approach to AI integration and management within enterprises. The challenges of a fragmented AI ecosystem are addressed through a unified API layer, allowing developers to interact with multiple AI services using a consistent client codebase. This abstraction enhances development efficiency while providing centralized credential management, observability, and governance over AI data and usage.

A key feature of Kong’s AI Gateway is its dynamic request routing, which optimizes API calls based on cost efficiency, resource allocation, and response accuracy. Intelligent distribution of AI workloads ensures better performance and cost control for organizations.

The AI Gateway Architecture is built upon Kong Gateway’s API management framework and plugin extensibility, introducing AI-specific plugins for semantic routing, security, observability, acceleration, and governance. These specialized capabilities keep AI-driven applications efficient, scalable, and compliant with enterprise policies.

A straightforward installation process makes deployment quick and seamless. With a single command, Kong’s AI Gateway is up and running, providing effortless AI workload management.

3. Tyk API Gateway

Tyk is a cloud-native, open-source API Gateway designed to support REST, GraphQL, TCP, and gRPC protocols. Since its inception in 2014, the focus has remained on performance and scalability, offering rate limiting, authentication, analytics, and microservice patterns.

Its ‘batteries-included’ approach ensures that organizations have access to a comprehensive suite of tools for API management without feature lockout. Tyk’s compatibility with Kubernetes, through the Tyk Kubernetes Operator, further enhances its adaptability in modern cloud environments.

While Tyk excels in general API management, it is not specifically tailored for AI applications.

Recognizing the growing importance of AI, Tyk has introduced Montag.ai, a product aimed at empowering platform and product teams to adopt AI with robust governance. However, this appears to be an additional offering rather than an inherent feature of the Tyk API Gateway. Consequently, organizations seeking solutions specifically optimized for AI workloads might find Tyk’s core gateway lacking in specialized AI functionalities compared to competitors like TrustGate, which are purpose-built for AI use cases.

Tyk is conveniently packaged in Docker that takes care of the installation and can be up and running in minutes.

4. KrakenD

KrakenD is a high-performance, open-source API Gateway designed to facilitate the adoption of microservices architectures. It offers features such as API aggregation, traffic management, authentication, and data transformation, enabling organizations to build scalable and resilient systems.

KrakenD’s stateless, distributed architecture ensures true linear scalability, handling thousands of requests per second, which makes it suitable for high-demand environments.

While KrakenD excels in general API management, as in the case of Tyk, it is not specifically tailored for AI applications. Its primary focus remains on optimizing API performance and scalability, without dedicated features for AI workloads. Organizations seeking solutions specifically optimized for AI might find KrakenD lacking in specialized functionalities compared to competitors like TrustGate, which are purpose-built for AI use cases.

KrakenD is also conveniently packaged as a Docker image, uploaded in a public repository, so it is easy to install and run.

5. CloudFlare

Cloudflare’s AI Gateway is designed to provide developers with enhanced visibility and control over their AI applications. Integration with the AI Gateway allows applications to monitor usage patterns, manage costs, and handle errors more effectively.

The platform offers features such as caching, rate limiting, request retries, and model fallback mechanisms, all aimed at optimizing performance and ensuring reliability. Notably, developers can connect their applications to the AI Gateway with minimal effort, often requiring just a single line of code, which streamlines the integration process.

Cloudflare’s solution leverages its existing infrastructure to offer scalable and efficient AI workload management. Features like advanced caching and rate limiting reduce latency and control costs, providing a robust framework for developers looking to optimize their AI applications. This approach ensures that AI services remain responsive and cost-effective, aligning with the demands of modern AI-driven applications.

Cloudflare’s AI Gateway is a proprietary service that requires user registration. Due to this limitation, it has been excluded from our comparison, as performance metrics and efficiency are inherently tied to Cloudflare’s internal infrastructure, details of which are not publicly disclosed.

6. Apache Apisix

Apache APISIX is an open-source, cloud-native API gateway designed to manage microservices and APIs with high performance, security, and scalability. Built on NGINX and etcd, it offers dynamic routing, hot plugin updates, load balancing, and support for multiple protocols, including HTTP, gRPC, WebSocket, and MQTT. APISIX provides comprehensive traffic management features such as dynamic upstream, canary release, circuit breaking, authentication, and observability.

A notable feature of Apache APISIX is its built-in low-code dashboard, which provides a powerful and flexible UI for developers to manage and operate the gateway efficiently. The platform supports hot updates and hot plugins, enabling configuration changes without requiring restarts, which saves development time and reduces system downtime.

Multiple security plugins are available for identity authentication and API verification, including CORS, JWT, Key Auth, OpenID Connect (OIDC), and Keycloak, ensuring robust protection against malicious attacks.

Despite having similar dependencies as Vulcand, the documentation offers a straightforward setup process, allowing everything to be up and running with a single command, making it far more convenient compared to Vulcand’s setup.

7. Mulesoft

MuleSoft’s API Gateway is a powerful solution designed to manage, secure, and monitor API traffic across enterprise environments. It acts as a control point, allowing organizations to enforce policies, authenticate users, and analyze API usage.

By integrating seamlessly with Anypoint Platform, MuleSoft’s API Gateway provides a centralized way to apply security policies, rate limiting, and access control, ensuring that APIs are protected against unauthorized access and threats. Additionally, its robust analytics and logging capabilities help organizations track API performance and troubleshoot issues efficiently.

Including MuleSoft’s API Gateway in this comparison is not an option due to the nature of our benchmarking process. The tests were conducted locally against various open-source API gateways, ensuring a fair and consistent comparison across solutions with a similar deployment model. MuleSoft’s API Gateway, as part of a larger enterprise-grade platform, incorporates proprietary optimizations and cloud integrations, placing it in a different category. Therefore, direct performance or feature comparisons would not provide meaningful insights, as these technologies are designed for different use cases and deployment environments.

Benchmarking AI Gateways: A Rigorous Performance Evaluation

To ensure an objective comparison, we conducted a rigorous evaluation of each AI gateway by installing and running them individually as the sole gateway. Using the ‘hey’ HTTP load generator, we measured key performance metrics, including response time, throughput, latency, and success rate.

The benchmarking process was automated through a Bash testing script, which dynamically adjusted the target URL based on each gateway’s configuration. All tests were conducted locally under consistent hardware and network conditions to maintain reliability.

The script begins by defining color-coded output for clarity, then checks for the presence of the ‘hey’ tool, automatically installing it if missing. This ensures a standardized testing environment, enabling accurate performance comparisons across different AI gateway solutions.

Next, with the tool successfully installed, we simulate a load of 50 concurrent users hitting the tested gateway for 30 seconds.

The code for this process is shown below:

The only variable that changes between benchmarks is PROXY_URL, which must point to a valid URL for the gateway being tested.

AI Gateway Benchmark Results:

To ensure an objective and reliable comparison, we conducted our benchmark tests using GCP Compute Engine, deploying AI gateways on two different machine types:

- c2-standard-8: 8 vCPUs, 32 GB Memory

- c4-highcpu-8: 8 vCPUs, 16 GB Memory

This setup allowed us to assess how each gateway performs under different hardware constraints, providing insights into their scalability and efficiency across varying resource allocations.

Each AI gateway was installed and executed as the sole gateway on the test machine, ensuring isolated conditions. We used the ‘hey’ HTTP load generator to simulate 50 concurrent users over a 30-second period, measuring key performance metrics:

- Requests per second (throughput)

- Average response time (latency in milliseconds)

Performance Comparison:4

Machine type: c2-standard-8

| Gateway | Hardware Specs | Requests per second | Average Response |

|---|---|---|---|

| NeuralTrust | c2-standard-8 | 10404.9620 | 4.8ms |

| Kong | c2-standard-8 | 9881.7013 | 5.1ms |

| Tyk | c2-standard-8 | 9744.5448 | 5.1ms |

| KrakenD | c2-standard-8 | 9433.7572 | 5.3ms |

| Apache Apisix | c2-standard-8 | 5955.9482 | 8.4ms |

Machine type: c4-highcpu-8

| Gateway | Hardware Specs | Requests per second | Average Response |

|---|---|---|---|

| NeuralTrust | c4-highcpu-8 | 19758.5314 | 2.5ms |

| Kong | c4-highcpu-8 | 18169.9125 | 2.8ms |

| Tyk | c4-highcpu-8 | 17053.2029 | 2.9ms |

| KrakenD | c4-highcpu-8 | 16136.7723 | 3.1ms |

| Apache Apisix | c4-highcpu-8 | 10380.4362 | 4.8ms |

As we can see…

The benchmark results highlight that TrustGate outperforms all other API Gateways in both throughput and response times, proving itself as the fastest and most efficient AI-first solution. On both tested machine types, TrustGate demonstrated superior performance, handling significantly more requests per second while maintaining the lowest latency.

These results make one thing clear: when it comes to AI-first performance, scalability, and security, TrustGate is the best AI gateway on the market.

Key Takeaways and Final Insights

This benchmark underscores a critical point: traditional API gateways cannot keep up with AI-specific demands. While they offer general-purpose API management capabilities, they lack the performance optimizations required for high-speed, high-volume AI interactions.

In contrast, TrustGate was built from the ground up to handle AI workloads, ensuring minimal latency, maximum throughput, and seamless scalability. Its AI-first architecture eliminates the inefficiencies found in repurposed API gateways, making it the most effective solution for enterprises looking to deploy and govern AI models at scale.

Why does this matter?

- Performance: TrustGate processes significantly more AI requests per second with lower latency, ensuring real-time responsiveness.

- Scalability: Whether running on standard or high-performance machines, TrustGate maintains its lead in efficiency, making it ideal for enterprise AI applications.

- Security & Compliance: Unlike traditional gateways, TrustGate is designed to enforce AI governance policies while maintaining performance.

As AI adoption continues to expand, businesses cannot afford to rely on outdated, inefficient infrastructure. The future of AI deployment lies in AI-first solutions, and TrustGate sets the industry standard.

What’s next?

The benchmark reveals that dedicated AI gateways not only boost performance and scalability but also offer enhanced security compared to traditional guardrails. This shift toward centralized, purpose-built solutions simplifies managing AI workloads in an ever-evolving landscape.

Now, it's worth considering how these insights align with your own AI infrastructure. Could a dedicated AI gateway improve your AI system's security and efficiency while reducing complexity?

We are sure that the answer is "yes." If you want to learn more about how our AI gateway, TrustGate, can harness a purpose-built architecture—designed from the ground up to eliminate latency issues, streamline AI interactions, and deliver robust, scalable security—don't hesitate to book a demo today. No strings attached.